I’ve been extremely pleased with Collada and the ColladaMaya plugin. It has allowed me to get at all the data I want with much less overhead than using the Maya API to get the same data. I spent a few weeks switching the asset pipeline over to Collada, hoping the time spent would eventually be made up. This last week I was able to add awesome new functionality to the asset pipeline, so I have effectively already made back my minor investment in time.

Materials

The focus of the past week has been supporting material creation directly in Maya. I wanted to see what ColladaFX was all about. Long story short, ColladaFX is an invaluable tool for working with hardware shaders in Maya.

Using ColladaFX is very simple. Briefly, it goes something like this:

- Create a ColladaFX material in Maya

- Select the Cg vertex and fragment shader files

- Assign and manipulate shader parameters

I have been using Cg for hardware shaders in the Engine, so it was especially easy to migrate the shaders into a ColladaFX material. I created a simple tool that finds all the materials in a Collada file and writes Catharsis Engine format materials. It has drastically simplified creating and testing materials. If it works in Maya as a ColladaFX material, it is almost guaranteed to work identically in Catharsis. It sure beats the hell out of editing an XML material definition or spending months creating an interactive shader editor.
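To give a rough idea of the first half of such a tool, here is a minimal Python sketch that finds the materials in a Collada document. It is not the actual tool: the function name `list_materials` and the sample document are made up for illustration, and it assumes the standard COLLADA 1.4 namespace and the `library_materials` / `material` / `instance_effect` layout. Writing the Catharsis Engine material format is left out because that format is not described here.

```python
import xml.etree.ElementTree as ET

# COLLADA 1.4 documents declare this default namespace.
NS = {"c": "http://www.collada.org/2005/11/COLLADASchema"}


def list_materials(collada_xml):
    """Return one dict per <material> found in <library_materials>.

    Hypothetical helper for illustration only.
    """
    root = ET.fromstring(collada_xml)
    materials = []
    for mat in root.findall("c:library_materials/c:material", NS):
        inst = mat.find("c:instance_effect", NS)
        # The url attribute is a fragment reference such as "#distort_fx".
        url = inst.get("url") if inst is not None else None
        materials.append({
            "id": mat.get("id"),
            "name": mat.get("name"),
            "effect": url.lstrip("#") if url else None,
        })
    return materials


# Made-up sample document, trimmed to the parts the sketch reads.
SAMPLE = """<COLLADA xmlns="http://www.collada.org/2005/11/COLLADASchema" version="1.4.1">
  <library_materials>
    <material id="distort_mat" name="distort">
      <instance_effect url="#distort_fx"/>
    </material>
  </library_materials>
</COLLADA>"""

if __name__ == "__main__":
    for material in list_materials(SAMPLE):
        print(material)
```

A real converter would follow each `effect` reference into `library_effects` to pick up the shader files and parameter values before writing an engine material.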

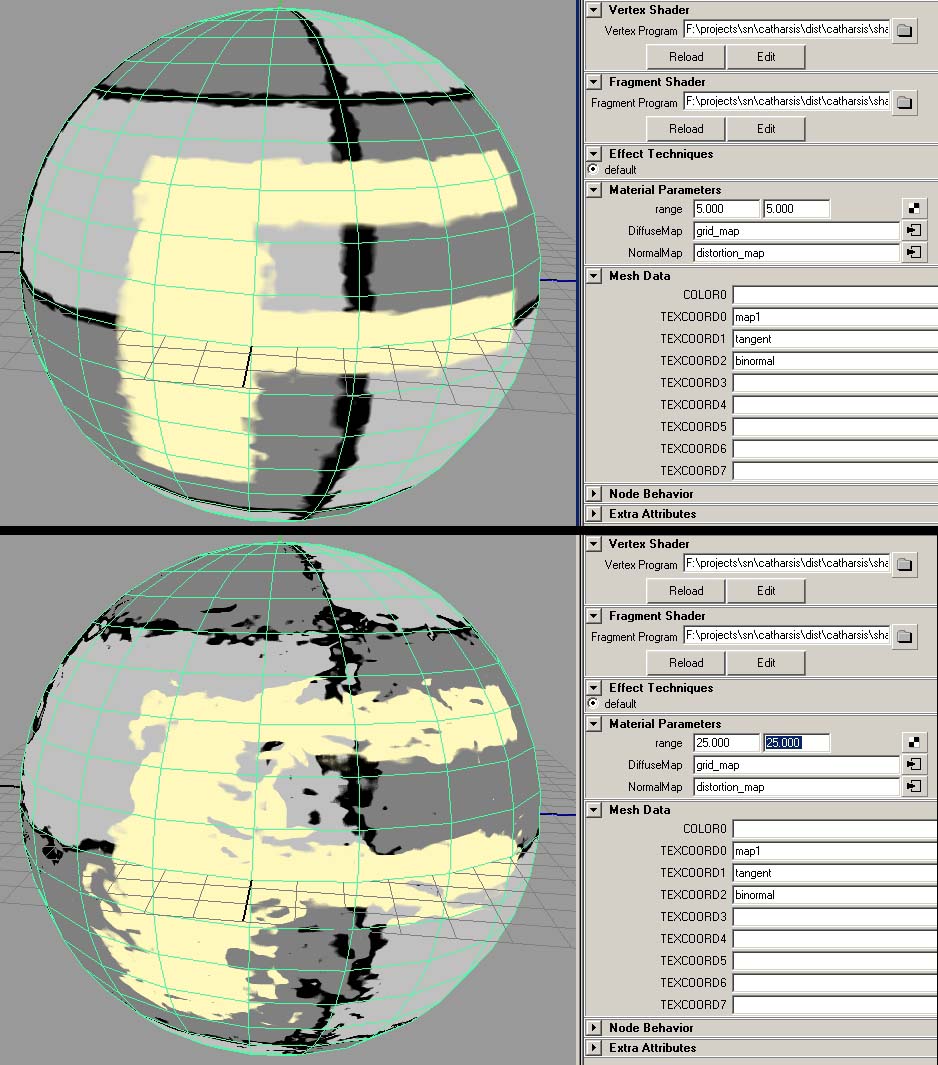

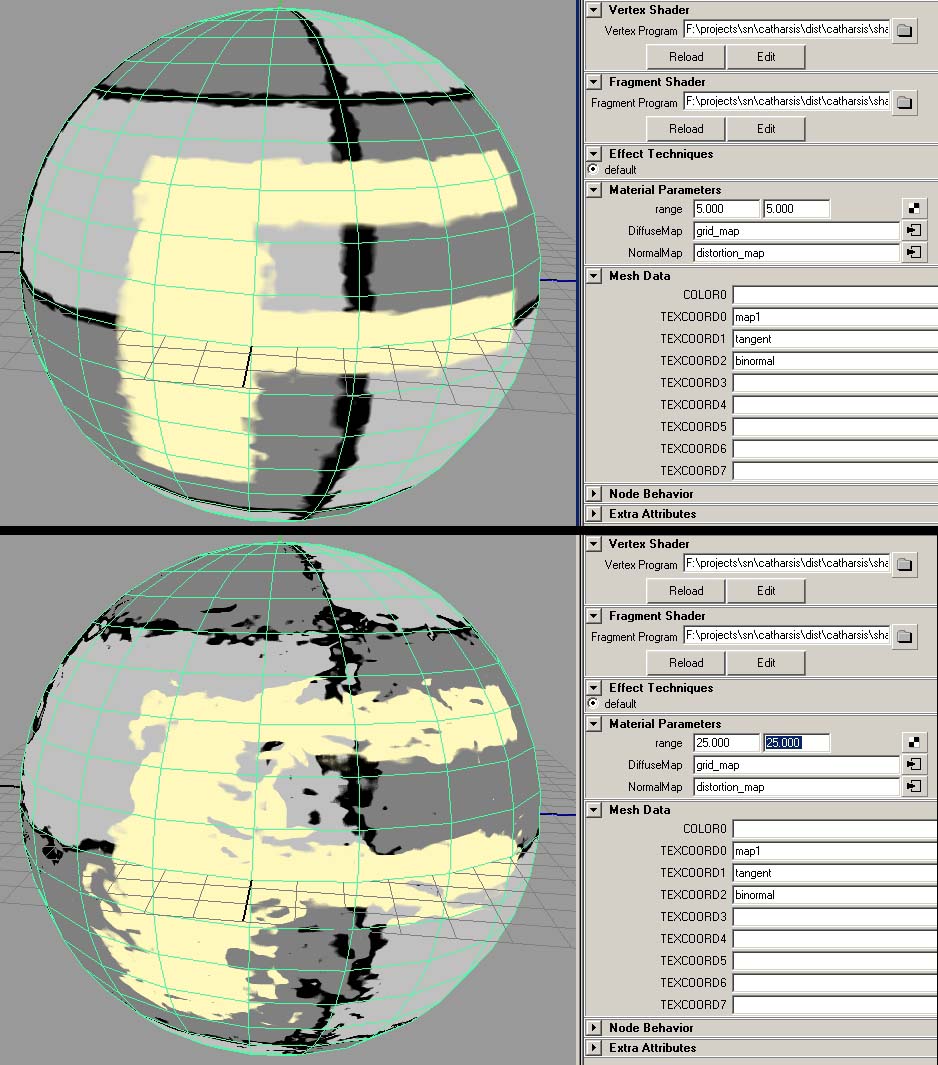

An example of a simple distortion shader in Maya using ColladaFX. Between the two images, the range parameter has been increased, which increases the distortion.

A really cool feature of ColladaFX materials is that arbitrary vertex data can be bound to shader parameters. In the above example, map1 (Maya’s default texture coordinate set) is bound to TEXCOORD0 in the shader. In the shader I then specify that TEXCOORD0 binds to the variable TexCoord0, which is then used in the shader program. Going further, I could create a new vertex data set in Maya called ‘distort_intensity’ that modifies how distorted the texture is over the surface, and paint its values on the mesh in Maya. I would then associate the ‘distort_intensity’ per-vertex data set with TEXCOORD3 and add the functionality in the Cg shaders.

This is excellent, but it would have been really cool for the per-vertex data names to be parsed out of the shader file, as is done for Material Parameters. The shaders would then be self-documenting, as it would be much more apparent what the per-vertex data is used for in the shader.
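On the export side, COLLADA 1.4 has a `bind_vertex_input` element under `instance_material` for this kind of mapping. The sketch below lists those bindings from a document. Whether ColladaMaya writes ColladaFX bindings in exactly this shape is an assumption, and the function name `vertex_bindings`, the sample document, and the values in it (including treating ‘distort_intensity’ as texture coordinate set 3) are invented for illustration.

```python
import xml.etree.ElementTree as ET

COLLADA_NS = "http://www.collada.org/2005/11/COLLADASchema"
NS = {"c": COLLADA_NS}


def vertex_bindings(collada_xml):
    """Return (material symbol, shader semantic, input semantic, input set)
    for every <bind_vertex_input> in the document.

    Hypothetical helper for illustration only.
    """
    root = ET.fromstring(collada_xml)
    bindings = []
    # instance_material can sit deep in the scene graph, so search everywhere.
    for inst in root.iter("{%s}instance_material" % COLLADA_NS):
        for bind in inst.findall("c:bind_vertex_input", NS):
            bindings.append((
                inst.get("symbol"),
                bind.get("semantic"),
                bind.get("input_semantic"),
                int(bind.get("input_set", "0")),
            ))
    return bindings


# Made-up sample: set 0 is map1, set 3 stands in for 'distort_intensity'.
SAMPLE_BINDINGS = """<COLLADA xmlns="http://www.collada.org/2005/11/COLLADASchema" version="1.4.1">
  <library_visual_scenes>
    <visual_scene id="scene">
      <node id="plane">
        <instance_geometry url="#plane_mesh">
          <bind_material>
            <technique_common>
              <instance_material symbol="distortSG" target="#distort_mat">
                <bind_vertex_input semantic="TEXCOORD0" input_semantic="TEXCOORD" input_set="0"/>
                <bind_vertex_input semantic="TEXCOORD3" input_semantic="TEXCOORD" input_set="3"/>
              </instance_material>
            </technique_common>
          </bind_material>
        </instance_geometry>
      </node>
    </visual_scene>
  </library_visual_scenes>
</COLLADA>"""

if __name__ == "__main__":
    for binding in vertex_bindings(SAMPLE_BINDINGS):
        print(binding)
```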

Collision

A couple of weeks ago I began to look at adding collision volumes to the Collada asset pipeline. I had thought that the physics functionality was part of the standard ColladaMaya plugin. I quickly learned that Feeling Software had created a completely separate (and MUCH more powerful) Maya plugin for physics called Nima. The Nima plugin offers ways to create and simulate many standard physics objects. It currently supports rigid bodies, cloth, and rag dolls. The physics objects can even be interactively manipulated during simulation (e.g. you can grab a skeleton and it’ll flop around as you move it in Maya).

All of it exports to Collada, which takes care of my collision volume export needs. It will be interesting to see what other physics objects I can support in the future.
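As a sketch of what reading those collision volumes could look like, the Python below pulls primitive shapes out of the `library_physics_models` section defined by COLLADA 1.4 physics. It assumes the exported rigid bodies use the `technique_common` shape primitives. The function name `collision_shapes` and the sample document are made up, and the exact output of Nima is not verified here.

```python
import xml.etree.ElementTree as ET

NS = {"c": "http://www.collada.org/2005/11/COLLADASchema"}

# Primitive shape elements from COLLADA 1.4 physics that this sketch handles.
PRIMITIVES = {"box", "sphere", "capsule", "cylinder", "plane"}


def local_name(tag):
    """Strip the '{namespace}' prefix ElementTree puts on tag names."""
    return tag.split("}", 1)[-1]


def collision_shapes(collada_xml):
    """Return (rigid body sid, primitive kind, parameters) tuples.

    Hypothetical helper for illustration only.
    """
    root = ET.fromstring(collada_xml)
    shapes = []
    path = "c:library_physics_models/c:physics_model/c:rigid_body"
    for body in root.findall(path, NS):
        for shape in body.findall("c:technique_common/c:shape", NS):
            for prim in shape:
                kind = local_name(prim.tag)
                if kind not in PRIMITIVES:
                    continue  # skip transforms, mass, mesh references, etc.
                # Each parameter (half_extents, radius, ...) is a float list.
                params = {
                    local_name(p.tag): [float(v) for v in (p.text or "").split()]
                    for p in prim
                }
                shapes.append((body.get("sid"), kind, params))
    return shapes


# Made-up sample with one static body built from a box and a sphere.
SAMPLE_PHYSICS = """<COLLADA xmlns="http://www.collada.org/2005/11/COLLADASchema" version="1.4.1">
  <library_physics_models>
    <physics_model id="crate_physics">
      <rigid_body sid="crate_body">
        <technique_common>
          <dynamic>false</dynamic>
          <shape><box><half_extents>1 2 0.5</half_extents></box></shape>
          <shape><sphere><radius>0.75</radius></sphere></shape>
        </technique_common>
      </rigid_body>
    </physics_model>
  </library_physics_models>
</COLLADA>"""

if __name__ == "__main__":
    for shape in collision_shapes(SAMPLE_PHYSICS):
        print(shape)
```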

Boo!

Last night, Halloween festivities were held on State Street. This year tickets were required for State Street and the entire event was shut down promptly at 1:30am.

Though I was wholly uninterested in the celebrations, someone threw a costume at me. In moments I looked as seen below. I did some programming so attired, delighting Gavin in the process. The costume lasted for about two hours, until I nearly passed out because the hat breathed like a plastic freezer bag.

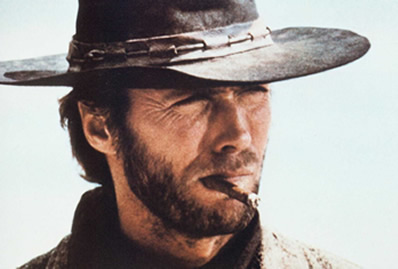

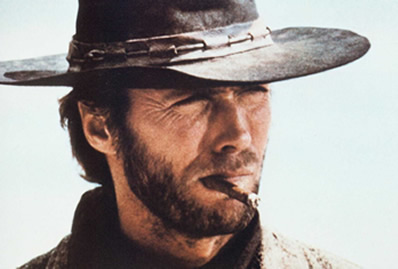

In case you’re wondering, I am supposed to be The Man with No Name. Turns out I inadvertently dressed up as a jackass.

The Man with No Name